The AI: More Than Human exhibition at the Barbican was hit and miss. On the whole it’s undoubtedly an interesting exhibition to go to, but not mind-blowing. If you’re at all interested in AI you’ll be familiar with most of the work in there, from Charles Babbage’s Analytical Engine to Memo Akten’s Learning to See, so there aren’t any amazing secrets to discover. But the format of the show is an interesting thing to see in itself.

For me the best bits were seeing a lot of the works, or their means of display, breaking down. We witnessed 3-4 different exhibits having to be restarted to get them going again (which seemed like a daily occurrence to the staff), and many of the lovely-looking Dell touchscreens were either not responding well or just not working.

(The screen froze when it asked me to pull ‘my worst face’. Coincidence? I think not.)

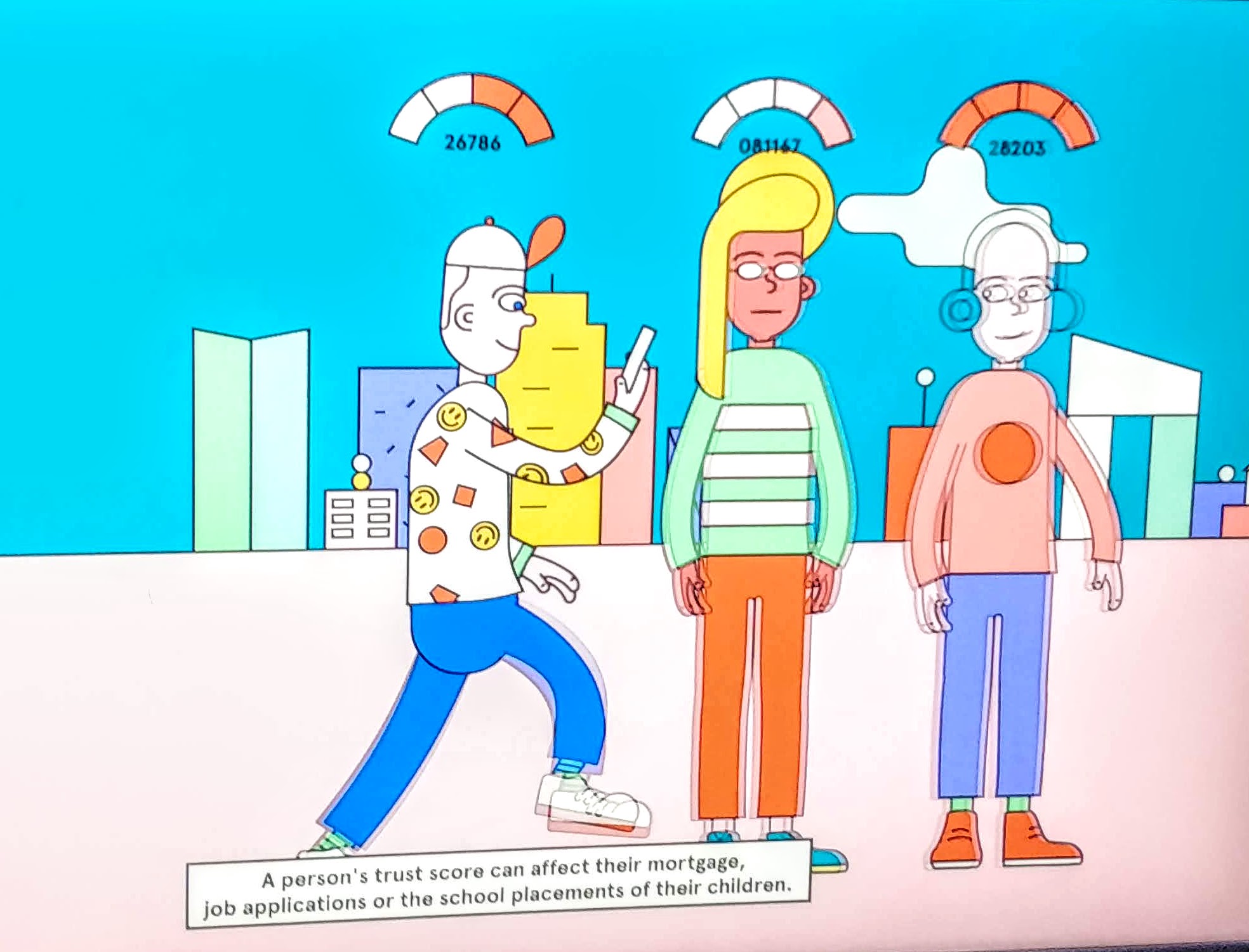

This was part of a really well made animation about AI in China. It contained some pretty wild claims about the Chinese government’s plans for its City Brain concept, which will manage traffic flows with smart traffic lights and the like, but also monitor faces cross-referenced against a full database of its citizens’ faces to help “find missing children” and track criminals. Dark 1984 kinda stuff.

I’ve always enjoyed these kinds of computational farming projects. Seems like an easy application of IoT tech with plenty of sensors, computer vision and some pumps and motors just for a laugh.

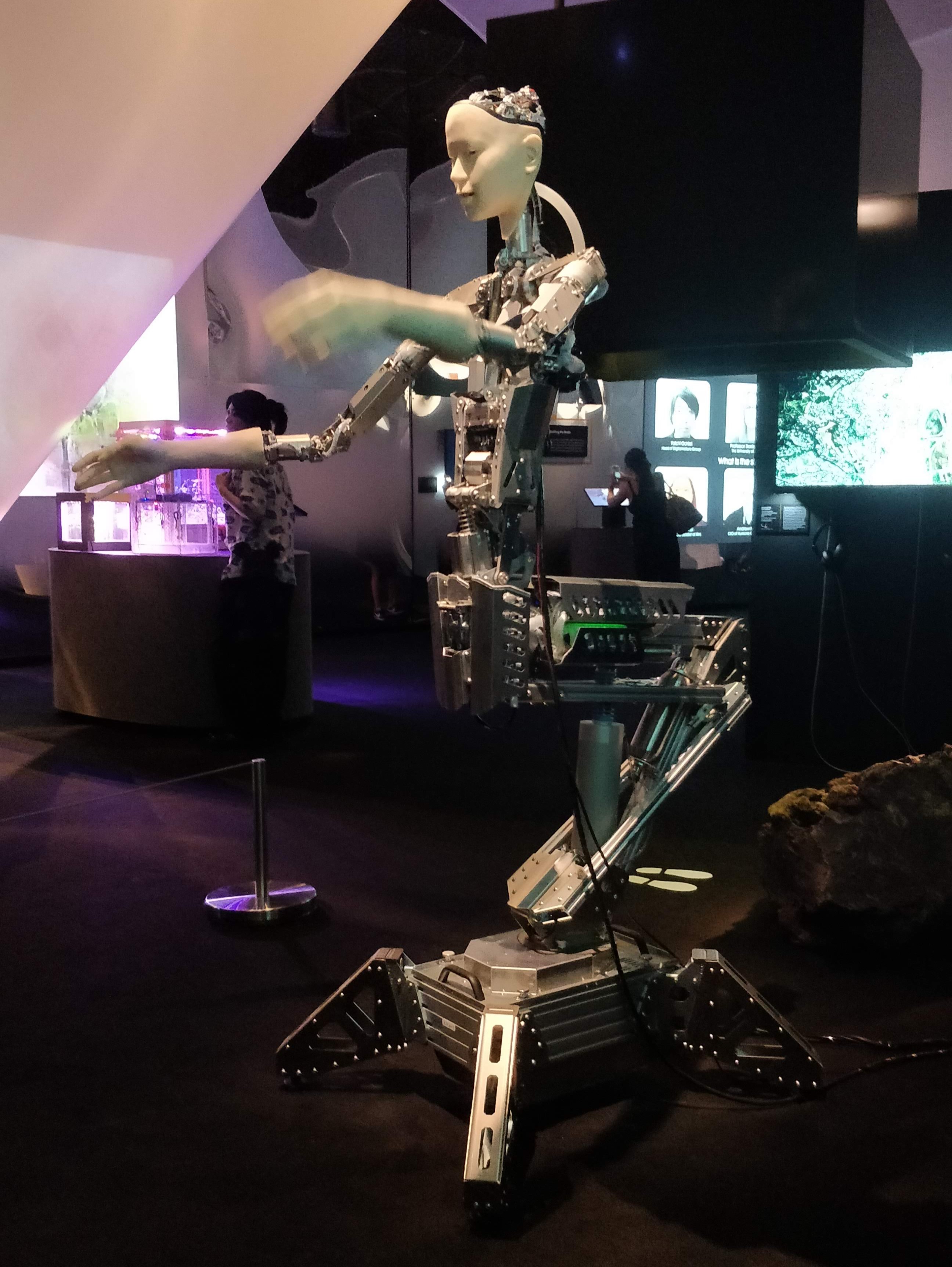

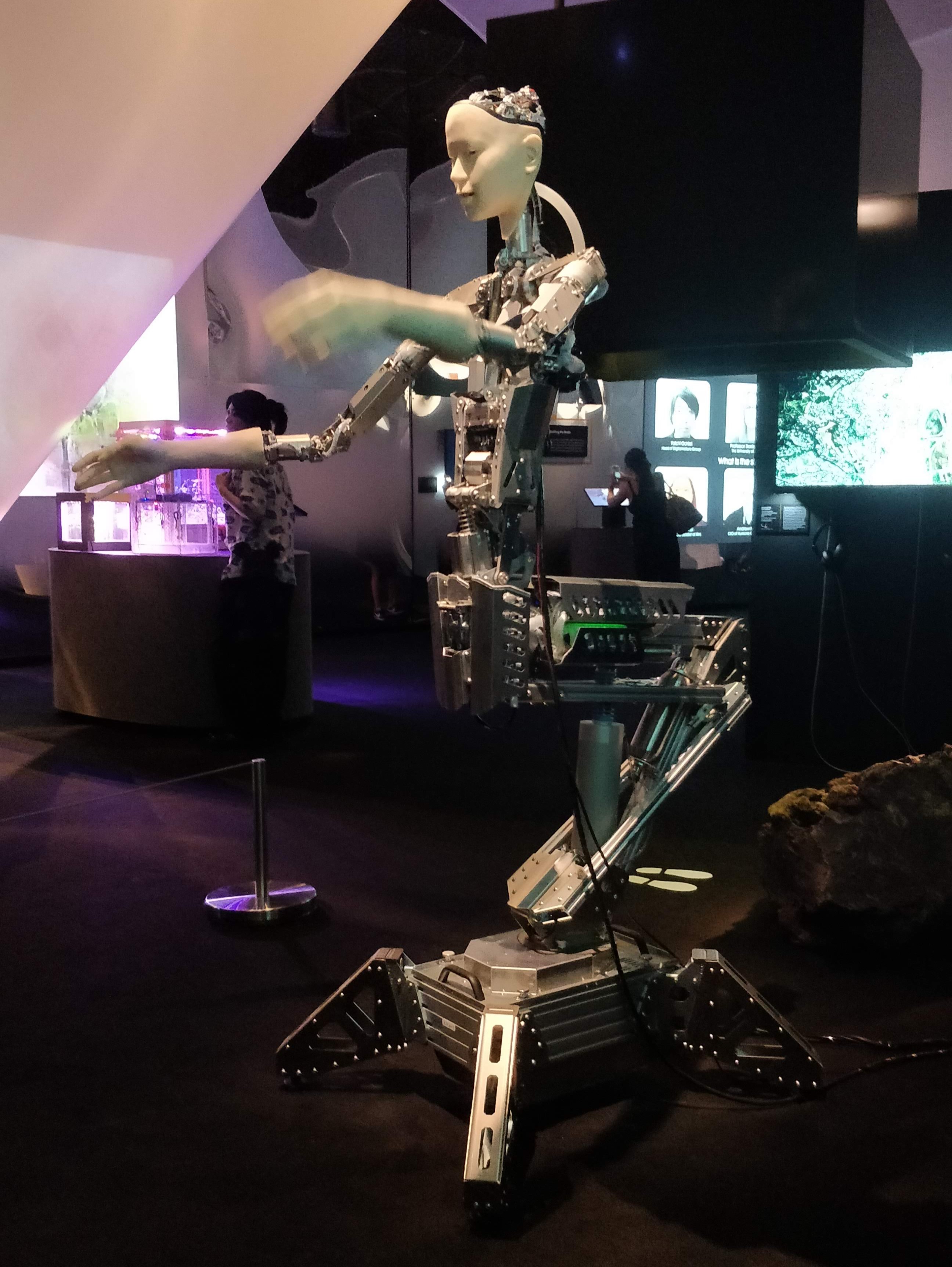

And then the obligatory robot flailing its weird arms around and twitching its sketchy fingers with a creepy prosthetic face, doing its thing.

It was really nicely made though; I like the sheet steel/ali with aluminium extrusion.

I was also quite interested by the choice of content. There is still a lot of focus on abstract representations of ‘what a computer sees’ or ‘how a computer thinks’. One example was a computer “watching” American Beauty, which the viewer could listen to with headphones, whilst it drew out a line in 3D space on a white background. This was meant to highlight the differences between how a computer processes the film and how a human does. Complete nonsense; it’s no more interesting than switching sensors around on an Arduino and getting a buzzer to sound when you get a text, or a motor to turn when someone tweets you. Perhaps there is still a lack of understanding among the general public about the concept of the algorithm and what that actually means. A computer only “sees” what we tell it to see, and then only “reacts” how we tell it to react. Even in machine learning applications the scope for it to do something unusual is very limited. AlphaGo playing a rogue move early in the groundbreaking match against Lee Sedol was unusual and, don’t get me wrong, fascinating, but it still played a move on the Go board, as expected. It didn’t tell Sedol to turn around and then spit in his coffee. I guess I wasn’t shocked or surprised by anything in the exhibition, but I wonder if the general public were.

Josh